Artificial Intelligence Reasoning: Current Limitations and Perspectives

Explore the limitations of artificial intelligence reasoning and recent experiments, like those from Apple, that could change our perspective.

In a world where advances in artificial intelligence (AI) are becoming increasingly frequent, one pressing question emerges within the tech community and broader society: Does AI really reason, or does it merely appear to do so?

The recent surge of AI models has sparked strong interest in answering this question. In this article, we explore some of the most relevant research and experiments in the field to shed light on it.

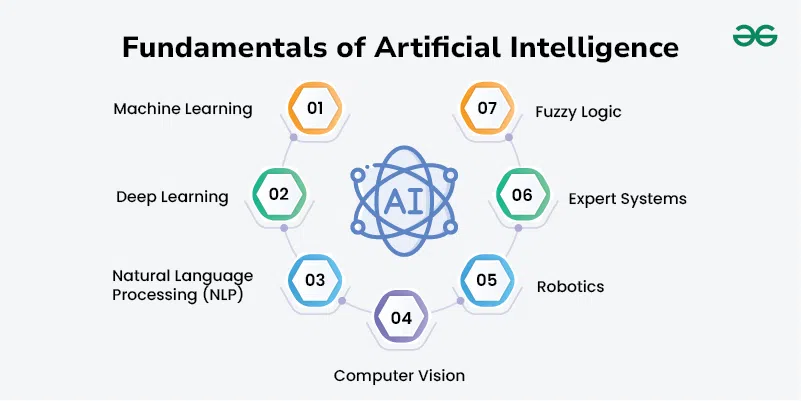

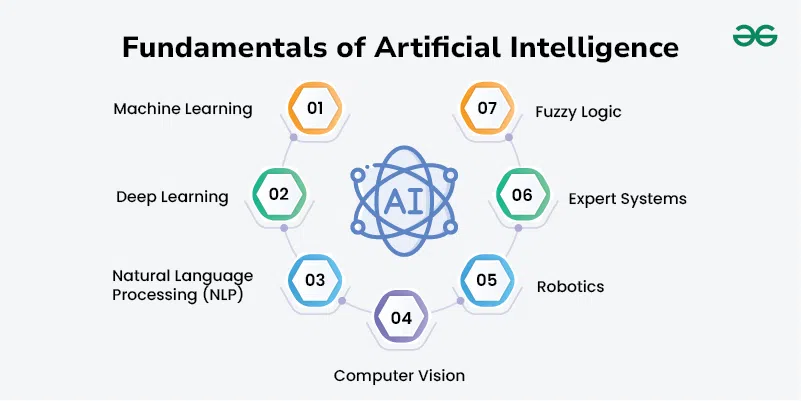

Simply put, AI reasoning refers to an algorithm's ability to interpret data and make decisions accordingly. Until recently, traditional AI models were designed to provide direct answers to specific questions, proving extremely effective in targeted tasks.

However, large reasoning models (LRMs) have emerged with a different proposition: they generate explicit intermediate reasoning before answering, which promises broader and more adaptable reasoning. These models are now being rigorously tested to evaluate how well they reason in unfamiliar situations.

Apple is one of the organizations at the forefront of AI reasoning experiments. Its recent tests used classic computational puzzles to evaluate AI’s reasoning capacity: the Tower of Hanoi, Checker Jumping, River Crossing, and Blocks World.

These puzzles were chosen because they allow AI reasoning to be assessed in controlled environments, where every move can be verified and the difficulty can be raised step by step, for example by adding disks to the Tower of Hanoi.
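To make the idea of verifying every move concrete, here is a minimal Python sketch of a Tower of Hanoi checker. It is an illustration only, not the harness used in the experiments: the function name `count_valid_moves`, the peg numbering (0 to 2), and the move format are all assumptions made for this example.

```python
from typing import List, Tuple

# Hypothetical move format: (source peg, target peg), pegs numbered 0..2.
Move = Tuple[int, int]


def count_valid_moves(n_disks: int, moves: List[Move]) -> Tuple[int, bool]:
    """Replay a proposed solution on a 3-peg Tower of Hanoi.

    Returns (number of legal moves before the first illegal one,
    whether the full sequence solves the puzzle).
    """
    # Peg 0 starts with all disks; the largest disk (n_disks) is at the bottom.
    pegs = [list(range(n_disks, 0, -1)), [], []]
    valid = 0
    for src, dst in moves:
        if src not in (0, 1, 2) or dst not in (0, 1, 2) or src == dst:
            break  # malformed move
        if not pegs[src]:
            break  # no disk to pick up
        disk = pegs[src][-1]
        if pegs[dst] and pegs[dst][-1] < disk:
            break  # a larger disk may not sit on a smaller one
        pegs[dst].append(pegs[src].pop())
        valid += 1
    # Solved only if every move was legal and all disks ended on peg 2.
    solved = valid == len(moves) and len(pegs[2]) == n_disks
    return valid, solved


if __name__ == "__main__":
    print(count_valid_moves(2, [(0, 1), (0, 2), (1, 2)]))
    print(count_valid_moves(2, [(0, 1), (0, 1)]))
```

Because the checker reports how many moves were legal before the first mistake, it can score partial progress as well as final success, and difficulty is controlled by a single parameter, `n_disks`.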

The evaluation process involved comparing different types of models. Notably, the Claude 3.7 Sonnet Thinking and DeepSeek-R1 models were tested both with and without explicit reasoning. These tests employed extended token budgets and several metrics, such as pass@k and the number of correct steps before the first error.
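The "pass at k" metric (usually written pass@k) estimates the probability that at least one of k sampled answers is correct. The article does not say how it was computed in these tests; the sketch below assumes the commonly used unbiased estimator, 1 - C(n - c, k) / C(n, k), where n answers are sampled per problem and c of them are correct. The function name `pass_at_k` is chosen for this example.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k from n samples of which c are correct.

    Assumption: this is the standard unbiased estimator,
    1 - C(n - c, k) / C(n, k); the experiments described in the
    article may have computed the metric differently.
    """
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    # comb(n - c, k) is 0 when fewer than k samples are incorrect,
    # so the estimate is 1.0 in that case.
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # 10 samples, 3 correct: chance that a single draw is correct.
    print(pass_at_k(10, 3, 1))
    # Same samples, but allowing 5 draws.
    print(pass_at_k(10, 3, 5))
```

With k = 1 the estimate reduces to the plain fraction of correct samples, c / n.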

This approach aimed not only to evaluate the performance of the models but also to identify patterns and limitations in their reasoning.

Among the most significant findings, the experiments showed that for simple problems, models without explicit reasoning tend to perform better. In moderately complex challenges, however, models equipped with explicit reasoning performed better, at the cost of significantly more tokens.

Interestingly, none of the models tested could reliably solve highly complex problems, where accuracy plummeted. A related phenomenon has been dubbed the "counter-intuitive scaling limit": beyond a certain level of difficulty, the models spend less reasoning effort rather than more, even when ample token budget remains.

One of the most apparent limitations in AI reasoning is its difficulty in executing long chains of logical steps, even when the solution procedure is provided step by step. The results suggest that this is not due to a lack of memory but rather to difficulty sustaining symbolic reasoning over many stages.

For example, consider the Tower of Hanoi puzzle and the River Crossing challenge. Despite their apparent simplicity, these tasks require long, exact sequences of logical steps, a capacity that AI systems have so far struggled to replicate reliably.
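To give a sense of how long these chains become, the following Python sketch generates the optimal Tower of Hanoi solution with the textbook recursive algorithm. The shortest solution for n disks has 2^n - 1 moves, so each added disk roughly doubles the number of steps that must be executed without error. The function name `hanoi_moves` and the peg numbering are assumptions made for this example.

```python
from typing import List, Tuple


def hanoi_moves(n: int, src: int = 0, aux: int = 1, dst: int = 2) -> List[Tuple[int, int]]:
    """Return the optimal move list for n disks as (source, target) pairs."""
    if n == 0:
        return []
    # 1. Move the top n-1 disks out of the way, onto the auxiliary peg.
    # 2. Move the largest disk to the destination.
    # 3. Move the n-1 disks from the auxiliary peg onto the largest disk.
    return (
        hanoi_moves(n - 1, src, dst, aux)
        + [(src, dst)]
        + hanoi_moves(n - 1, aux, src, dst)
    )


if __name__ == "__main__":
    for n in (3, 5, 10):
        # The move count matches 2**n - 1 for every n.
        print(n, len(hanoi_moves(n)), 2**n - 1)
```

The algorithm itself fits in a few lines, yet a 10-disk instance already requires 1,023 consecutive correct moves, which is the kind of long chain the experiments probe.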

Thus far, we have explored the essence of reasoning in artificial intelligence, examined Apple’s pioneering experiments, and taken an in-depth look at some of the most significant limitations in AI reasoning.

A recent article by cognitive psychologist and AI critic Gary Marcus suggests that models like those tested by Apple can only reproduce and recombine previously learned patterns rather than create new concepts or engage in true symbolic reasoning. According to Marcus, current AI neither reasons like humans nor reliably follows classical algorithms.

Conversely, analyst Brian and programmer Godki argue that the observed limitations may simply reflect the design choices of the models: decisions made to conserve resources, or the result of insufficient training. They also question whether symbolic puzzles are a valid measure of AI reasoning, suggesting that these tests could carry an inherent bias.

Moving beyond puzzles, an evaluation of AI models was conducted using high school and university-level math questions. The results revealed a similar pattern: models performed remarkably well when faced with problems for which they had prior training data, yet their performance noticeably declined when encountering completely new challenges.

This tendency to excel on familiar datasets and falter on novel tasks underscores just how much remains to be explored regarding the limitations and potential of artificial intelligence.

Some critics suggest that the reasoning exhibited by AI models is merely a regurgitation of learned patterns rather than genuine thought. However, it cannot be ruled out that improvements in algorithm structure and token usage might lead to significant advances in their reasoning capabilities.

While some believe that refining current models could suffice, others insist on the necessity of adopting a completely new paradigm. Clearly, this field remains ripe for debate and divergent opinions as it continues to evolve.

In conclusion, recent experiments have illuminated the profound restrictions and paradoxes inherent in the current state of artificial intelligence. Despite considerable advancements, the ability of AI models to reason in a human-like manner remains, for the most part, an unresolved challenge.

Our understanding of AI and its reasoning capabilities affects practical applications as well as societal and philosophical expectations for the future. This makes it essential to continue exploring this rapidly evolving field.

We invite our readers to reflect, comment, and join the debate. Do you believe that artificial intelligence will eventually reason like humans? What is your perspective? We look forward to hearing your thoughts.